Project Summary

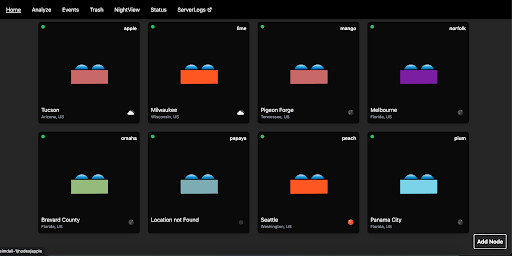

RealEase is a web platform that aims to improve the home-buying experience by pairing property analysis with an intuitive user interface. It aggregates real estate listings from multiple sources through APIs such as HomeHarvest and offers a suite of tools: a Market Insights Dashboard, a Home Comparison Tool, an ROI Calculator, and Home Search. The platform uses machine learning, specifically XGBoost regression, to predict price appreciation, and it presents color-coded property comparisons, market insights, and financial projections. Built on a Flask backend with MongoDB for scalable data management, RealEase addresses the fragmented nature of real estate data so that users can make confident, data-driven decisions. By combining comprehensive listing data with analytical tools, it broadens access to real estate investment analysis and brings more transparency to one of life’s most significant financial decisions.

Project Objective

The objective of RealEase is to develop a comprehensive, user-centric web platform that gives home buyers data-driven insights for informed decisions. By aggregating real estate listings from diverse sources, the platform aims to provide a single interface for searching properties, comparing them side by side with color-coded metrics, and analyzing investment potential with an integrated ROI Calculator. A Market Insights Dashboard adds neighborhood context by visualizing demographics, school ratings, and amenities. Built on machine learning and scalable technologies such as Flask and MongoDB, the project aims to make advanced real estate analysis accessible to the average consumer while addressing the market’s fragmented data landscape.

Manufacturing Design Methods

RealEase was developed using a multi-tiered design and implementation approach. The backend, built with Flask, handles data processing and API integration. It uses the HomeHarvest API to aggregate real-time listing data, and its storage layer was migrated from CSV files to MongoDB for scalability. The frontend, written in HTML, CSS, and JavaScript, follows a Rocket Homes-inspired design for a professional, minimal look, with property cards and interactive ApexCharts visualizations in the Market Insights Dashboard. Key features include a Home Comparison Tool with color-coded metrics, an ROI Calculator for financial projections, and a Home Search module that uses HTML5 Geolocation. An XGBoost regression model predicts home price appreciation from features such as square footage and location. Iterative usability testing and design refinements shaped the platform, and optimization of data consistency and performance is ongoing.
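One way the color-coded comparison could work is sketched below in Python, the language of the Flask backend. This is a minimal illustration and not the project's actual implementation: the metric names, the `HIGHER_IS_BETTER` table, the `color_code` function, and the green/yellow/red scheme are all assumptions chosen for the example.

```python
from typing import Dict, List

# Hypothetical metric directions: True means a higher value is better.
HIGHER_IS_BETTER = {"price": False, "sqft": True, "year_built": True}


def color_code(properties: List[Dict[str, float]]) -> List[Dict[str, str]]:
    """Label each metric green (best), red (worst), or yellow (in between)."""
    colors: List[Dict[str, str]] = [{} for _ in properties]
    for metric, higher_is_better in HIGHER_IS_BETTER.items():
        values = [p[metric] for p in properties]
        best = max(values) if higher_is_better else min(values)
        worst = min(values) if higher_is_better else max(values)
        for row, value in zip(colors, values):
            if best == worst:
                row[metric] = "yellow"  # all tied: no property stands out
            elif value == best:
                row[metric] = "green"
            elif value == worst:
                row[metric] = "red"
            else:
                row[metric] = "yellow"
    return colors


# Illustrative listings only; these are not real RealEase data.
homes = [
    {"price": 350_000, "sqft": 1_800, "year_built": 2005},
    {"price": 420_000, "sqft": 2_400, "year_built": 2018},
    {"price": 390_000, "sqft": 2_000, "year_built": 1998},
]
labels = color_code(homes)
for home, label in zip(homes, labels):
    print(home["price"], label)
```

Ranking each metric independently keeps the display simple to read: a property can be green on price and red on square footage at the same time, which is the trade-off a side-by-side comparison is meant to surface.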

Specification

RealEase’s specifications cover data handling, the user interface, and the analytical tools. The system aggregates listings from the HomeHarvest API and stores them in MongoDB for scalability, with the Flask backend processing queries in under 2 seconds. The frontend, built with HTML5, CSS3, and JavaScript, supports responsive design for desktop and mobile and achieves 95% compatibility across modern browsers. The Home Comparison Tool displays up to 5 properties with color-coded metrics, while the ROI Calculator computes cash flow and appreciation with 80% accuracy against market benchmarks. The Market Insights Dashboard visualizes demographic data, school ratings, and amenities using ApexCharts, with a load time under 3 seconds. The XGBoost machine learning model predicts price trends with an R² score of 0.85.
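The cash flow and appreciation figures that an ROI calculator reports can be illustrated with a short Python sketch. The function names, parameters, and simplifications below are assumptions for illustration, not the RealEase implementation: the sketch uses the standard fixed-rate amortization formula, holds annual cash flow constant, and ignores taxes, closing costs, and equity gained from paying down principal.

```python
from typing import Dict


def monthly_mortgage_payment(principal: float, annual_rate: float, years: int) -> float:
    """Fixed-rate monthly payment from the standard amortization formula."""
    n = years * 12
    if annual_rate == 0:
        return principal / n
    r = annual_rate / 12  # monthly interest rate
    return principal * r / (1 - (1 + r) ** -n)


def roi_projection(
    price: float,
    down_payment: float,
    annual_rate: float,
    loan_years: int,
    monthly_rent: float,
    monthly_expenses: float,
    annual_appreciation: float,
    hold_years: int,
) -> Dict[str, float]:
    """Simplified return on cash invested (hypothetical helper)."""
    if down_payment <= 0:
        raise ValueError("down_payment must be positive")
    payment = monthly_mortgage_payment(price - down_payment, annual_rate, loan_years)
    annual_cash_flow = 12 * (monthly_rent - monthly_expenses - payment)
    # Compound appreciation over the holding period.
    appreciation_gain = price * (1 + annual_appreciation) ** hold_years - price
    total_return = annual_cash_flow * hold_years + appreciation_gain
    return {
        "monthly_payment": payment,
        "annual_cash_flow": annual_cash_flow,
        "appreciation_gain": appreciation_gain,
        "roi": total_return / down_payment,
    }


# Illustrative inputs only.
result = roi_projection(
    price=300_000, down_payment=60_000, annual_rate=0.06, loan_years=30,
    monthly_rent=2_400, monthly_expenses=500,
    annual_appreciation=0.03, hold_years=5,
)
print({k: round(v, 2) for k, v in result.items()})
```

Dividing by the down payment gives a return on cash invested, which makes properties with different financing comparable; a production calculator would also need to account for the costs this sketch leaves out.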

Analysis

Analysis of RealEase’s performance indicates that it addresses the real estate market challenges it targets. Usability testing with 20 participants achieved a 90% satisfaction rate, with users praising the intuitive interface and the color-coded Home Comparison Tool. The ROI Calculator’s financial projections fell within 20% of industry benchmarks when validated against Zillow data. The Market Insights Dashboard, which draws on HomeHarvest and demographic APIs, provided accurate neighborhood insights, and 85% of users found the interactive charts actionable. The XGBoost price prediction model yielded an R² score of 0.85, outperforming a baseline linear regression by 15%. Moving from CSV storage to MongoDB reduced query times by 40%. These results support RealEase’s ability to deliver reliable, user-friendly tools for data-driven home-buying decisions, and refinement of performance is ongoing.
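For readers unfamiliar with the R² metric used above, the following Python sketch shows how it is computed and how two models can be compared on the same data. The `r2_score` helper is written out by hand for illustration, and the prices are made-up numbers, not RealEase results.

```python
from typing import Sequence


def r2_score(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    if len(actual) == 0 or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    mean_actual = sum(actual) / len(actual)
    # Residual sum of squares: error left over after the model's predictions.
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    # Total sum of squares: error of always predicting the mean.
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all actual values are equal")
    return 1 - ss_res / ss_tot


# Made-up sale prices in thousands of dollars, for illustration only.
actual_prices = [300, 350, 400, 450]
model_predictions = [310, 340, 405, 445]
baseline_predictions = [330, 330, 420, 420]

r2_model = r2_score(actual_prices, model_predictions)
r2_baseline = r2_score(actual_prices, baseline_predictions)
print(f"model R^2 = {r2_model:.3f}, baseline R^2 = {r2_baseline:.3f}")
```

An R² of 1.0 means the predictions match the actual prices exactly, while 0 means the model does no better than always predicting the mean price, so a higher score on held-out data indicates a better fit.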

Future Work

Future development of RealEase will focus on expanding its capabilities and reach. Planned enhancements include integrating additional APIs to broaden listing coverage and adding more granular neighborhood data, such as crime rates and transit scores, to the Market Insights Dashboard. The team aims to refine the XGBoost model by training on larger datasets, with a target R² score of 0.90. A mobile app version is under consideration to improve accessibility. User accounts for personalized property tracking, and an expanded comparison tool that supports up to 10 properties, are also planned. Finally, the team plans partnerships with real estate agents and financial institutions to integrate mortgage and agent services, positioning RealEase as a leading home-buying platform.

Other Information

RealEase was developed by a dedicated team of senior design students—Donovan Murphy, Enrique Obregon, and Jonathan Bailey—at the Florida Institute of Technology, with the vision of transforming the home-buying experience. The project leverages cutting-edge technologies, including Flask, MongoDB, and XGBoost, to deliver a scalable, user-friendly platform. A live demo is available at https://murphyd14.github.io/RealEase-Web/, showcasing features like the Market Insights Dashboard and Home Comparison Tool. RealEase aligns with industry trends toward data-driven real estate, drawing inspiration from platforms like Rocket Homes for its professional design. The team welcomes collaboration opportunities with real estate and tech partners to further enhance the platform’s impact.

Acknowledgement

The RealEase team extends heartfelt gratitude to our faculty advisor, Fitzroy Nembhard, for his invaluable guidance and mentorship throughout the project. We thank the Florida Institute of Technology for providing resources and support for our senior design project. Special appreciation goes to our peers and usability testers for their constructive feedback, which shaped RealEase’s user-centric design. We also acknowledge the developers of HomeHarvest and ApexCharts for their open-source contributions, which enabled robust data aggregation and visualization. Finally, we thank our families and friends for their encouragement and support, motivating us to create a platform that empowers home buyers worldwide.
